HORSES3D is a 3D parallel code that uses the high–order Discontinuous Galerkin Spectral Element Method (DGSEM) and is written in modern object–oriented Fortran (2008+); see Ferrer et al. [2020].

The code is currently being developed at ETSIAE–UPM (the School of Aeronautics of the Universidad Politécnica de Madrid). HORSES3D is built on the NSLITE3D code by David A. Kopriva, which is based on the book “Implementing Spectral Methods for Partial Differential Equations” (Kopriva [2009]).

HORSES3D uses a nodal Discontinuous Galerkin Spectral Element Method (DGSEM) to discretize the different physics implemented. In particular, it permits the use of the Gauss–Lobatto version of the DGSEM, which makes it possible to construct energy–stable schemes using the summation–by–parts simultaneous–approximation–term (SBP–SAT) property. Moreover, it handles arbitrary three–dimensional curvilinear hexahedral meshes while maintaining high–order spectral accuracy and free–energy stability.

The shared–memory parallelization distributes the most expensive tasks (i.e. the volume and surface integral computations) to different threads using OpenMP directives.

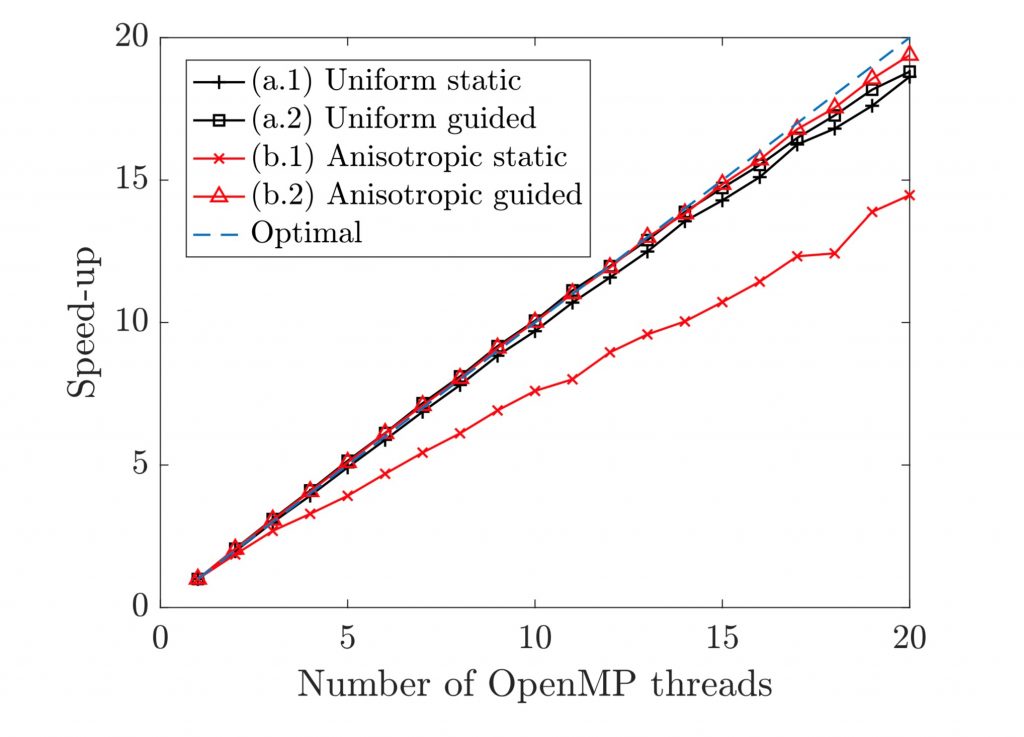

In this section, a strong scalability test is presented for the flow around a sphere (the details can be found in Rueda-Ramírez [2019]). The Roe scheme is used as the inviscid Riemann solver, and the BR1 scheme is used for the viscous boundary terms.

Two common OpenMP schedules were tested: static and guided. The static schedule divides the loops over elements and faces into equal–sized chunks (or as equal as possible) among the available threads, i.e. chunks of roughly (number of elements)/(number of threads) and (number of faces)/(number of threads) iterations, respectively.

The guided schedule gives each thread a smaller chunk of loop iterations. When a thread finishes its chunk, it retrieves another one to compute. The chunk size starts large and decreases as the loop nears completion.
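The difference between the two schedules can be illustrated with a minimal Python sketch. It is not HORSES3D code: the function names and the loop size of 1003 iterations are hypothetical, and the guided rule used here (each new chunk is the remaining iterations divided by the number of threads, rounded up) is an assumption, since the exact chunk sizes are left to the OpenMP implementation.

```python
import math

def static_chunks(n_iters, n_threads):
    """Split n_iters into n_threads chunks that are as equal as possible."""
    base, extra = divmod(n_iters, n_threads)
    # The first `extra` threads take one additional iteration.
    return [base + 1 if t < extra else base for t in range(n_threads)]

def guided_chunks(n_iters, n_threads, min_chunk=1):
    """Successive chunk sizes for a guided-like schedule.

    Assumed rule: each chunk is ceil(remaining / n_threads), never smaller
    than min_chunk and never larger than what is left.
    """
    chunks = []
    remaining = n_iters
    while remaining > 0:
        size = max(min_chunk, math.ceil(remaining / n_threads))
        size = min(size, remaining)
        chunks.append(size)
        remaining -= size
    return chunks

# Hypothetical loop of 1003 elements on 40 threads.
static = static_chunks(1003, 40)
guided = guided_chunks(1003, 40)
print("static: ", len(static), "chunks, sizes", min(static), "to", max(static))
print("guided: ", len(guided), "chunks, first", guided[:4], "last", guided[-4:])
```

With the static schedule each thread receives exactly one chunk, so load imbalance between elements cannot be corrected at run time. The guided sketch produces many more chunks of decreasing size, which threads pick up as they become free.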

The computation time needed to take 100 time steps (RK3) was measured using a 2–socket Intel CPU with 2×20 cores at 2.2 GHz and 529 GB RAM. Two discretizations with roughly the same number of DOFs (±5%) were used:

Each of the simulations was run five times and the computation time was averaged. The results are shown in Figure 5.
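As a sketch of how such measurements are typically post-processed, the following Python snippet averages repeated timings and derives the speed–up and parallel efficiency relative to the single-thread run. The timing values are hypothetical placeholders, not results from this test, and the function name is an assumption made for illustration.

```python
from statistics import mean

def scaling_table(timings):
    """Return {threads: (mean_time, speedup, efficiency)}.

    timings maps a thread count to a list of wall-clock times in seconds
    and must contain an entry for 1 thread, which is the reference.
    """
    avg = {p: mean(runs) for p, runs in timings.items()}
    t_serial = avg[1]
    table = {}
    for p in sorted(avg):
        speedup = t_serial / avg[p]
        table[p] = (avg[p], speedup, speedup / p)
    return table

# Hypothetical timings (five repetitions each), for illustration only.
timings = {
    1:  [400.0, 402.0, 398.0, 401.0, 399.0],
    10: [50.0, 51.0, 49.0, 50.5, 49.5],
    40: [16.0, 16.5, 15.5, 16.2, 15.8],
}

table = scaling_table(timings)
for p, (t, s, e) in table.items():
    print(f"{p:3d} threads: {t:7.2f} s  speed-up {s:6.2f}  efficiency {e:5.2f}")
```

An efficiency close to 1 indicates near-ideal strong scaling, while lower values indicate overhead from load imbalance or synchronization.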

The benchmark solved herein is the inviscid Taylor–Green Vortex (TGV) problem. We consider a coarse mesh with 32³ elements and a fine mesh with 64³ elements, and we vary the polynomial order from N = 3 to N = 6. The solver scalability curves are depicted in Figures 6 and 7. We consider both pure MPI and Hybrid (OpenMP+MPI) strategies. The total number of cores ranges from 1 to 700, and the number of OpenMP threads, when enabled, is 48.
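To give a sense of the problem sizes involved, the short Python sketch below counts the degrees of freedom per solution variable for both meshes, assuming the usual DGSEM layout of (N + 1)³ nodes per hexahedral element. The function name is hypothetical.

```python
def dofs_per_variable(elems_per_direction, poly_order):
    """Nodes in a cubic mesh of hexahedra with (N + 1)^3 nodes per element."""
    n_elements = elems_per_direction ** 3
    return n_elements * (poly_order + 1) ** 3

for elems in (32, 64):
    for order in range(3, 7):
        dofs = dofs_per_variable(elems, order)
        print(f"{elems}^3 elements, N = {order}: {dofs:,} DOFs per variable")
```

The total number of unknowns is this count multiplied by the number of conserved variables of the equations being solved.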

With pure MPI, the 32³ mesh (Fig. 6) does not show an increase in the solver speed–up when increasing the number of cores. Since this mesh is coarse (81 elements per core when using 400 cores, 54 elements per core when using 600), it is to be expected that communication time is the bottleneck in this simulation. However, with this configuration it is possible to obtain speed–ups of 100 using 100 cores, depending on the polynomial order. The Hybrid strategy (Fig. 6), on the other hand, is able to maintain high speed–ups before stagnating at approximately 500 cores. The higher the polynomial order, the higher the speed–up, since more of the calculations are performed inside the elements (i.e. they do not involve communication).
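The elements-per-core figures quoted above follow directly from the mesh size, as this small Python check shows. It assumes an even partition of a mesh with 32^3 = 32768 elements and rounds down; the function name is hypothetical.

```python
def elements_per_core(n_elements, n_cores):
    """Whole number of elements each core gets under an even partition."""
    return n_elements // n_cores

coarse = 32 ** 3  # 32768 elements
for cores in (100, 400, 600):
    print(f"{cores} cores: {elements_per_core(coarse, cores)} elements per core")
```

With so few elements per MPI rank, a large share of each rank's faces lie on partition boundaries, which is consistent with communication dominating the run time at high core counts.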

The results for the 64³ mesh are shown in Fig. 7. With the pure MPI strategy, we observe an improvement compared to the coarser mesh in Fig. 6.

We do not observe stagnation for high polynomial orders; for the remaining orders, stagnation occurs beyond approximately 400 cores. The Hybrid strategy in Fig. 7 does not show stagnation in the range considered. Despite being slightly irregular for lower polynomial orders, the speed–up curve is a straight line for N = 6.

If you just wish to use HORSES3D for running simulations, we recommend downloading a pre-compiled binary package for your distribution from the Downloads page.

If you plan to develop new code or extend the existing functionality, or would just prefer to compile it yourself, there are three ways to get the code.

The code is available on GitHub: https://github.com/loganoz/horses3d

Allen, S. M., & Cahn, J. W. (1972). Ground state structures in ordered binary alloys with second neighbor interactions. Acta Metallurgica, 20(3), 423-433.

Balay, S., Abhyankar, S., Adams, M., Brown, J., Brune, P., Buschelman, K., Dalcin, L., Dener, A., Eijkhout, V., Gropp, W., et al. PETSc users manual.

Cahn, J. W., & Hilliard, J. E. (1958). Free energy of a nonuniform system. I. Interfacial free energy. The Journal of Chemical Physics, 28(2), 258-267.

Cahn, J. W., & Hilliard, J. E. (1959). Free energy of a nonuniform system. III. Nucleation in a two‐component incompressible fluid. The Journal of Chemical Physics, 31(3), 688-699.

Cox, C., Liang, C., & Plesniak, M. W. (2016). A high-order solver for unsteady incompressible Navier–Stokes equations using the flux reconstruction method on unstructured grids with implicit dual time stepping. Journal of Computational Physics, 314, 414-435.

Ferrer, E. (2017). An interior penalty stabilised incompressible discontinuous Galerkin–Fourier solver for implicit large eddy simulations. Journal of Computational Physics, 348, 754-775.

Hindenlang, F., Bolemann, T., & Munz, C. D. (2015). Mesh curving techniques for high order discontinuous Galerkin simulations. In IDIHOM: Industrialization of high-order methods-a top-down approach (pp. 133-152). Springer, Cham.

Karniadakis, G., & Sherwin, S. (2013). Spectral/hp element methods for computational fluid dynamics. Oxford University Press.

Kopriva, D. A. (2009). Implementing spectral methods for partial differential equations: Algorithms for scientists and engineers. Springer Science & Business Media.

Manzanero, J., Rubio, G., Kopriva, D. A., Ferrer, E., & Valero, E. (2020). A free–energy stable nodal discontinuous Galerkin approximation with summation–by–parts property for the Cahn–Hilliard equation. Journal of Computational Physics, 403, 109072.

Manzanero, J., Rubio, G., Kopriva, D. A., Ferrer, E., & Valero, E. (2020). An entropy–stable discontinuous Galerkin approximation for the incompressible Navier–Stokes equations with variable density and artificial compressibility. Journal of Computational Physics, 408, 109241.

Manzanero, J., Rubio, G., Kopriva, D. A., Ferrer, E., & Valero, E. (2020). Entropy–stable discontinuous Galerkin approximation with summation–by–parts property for the incompressible Navier–Stokes/Cahn–Hilliard system. Journal of Computational Physics, 109363.

Manzanero, J., Ferrer, E., Rubio, G., & Valero, E. (2020). Design of a Smagorinsky Spectral Vanishing Viscosity turbulence model for discontinuous Galerkin methods. Computers & Fluids, 104440.

Rueda-Ramírez, A. M., Rubio, G., Ferrer, E., & Valero, E. (2019). Truncation Error Estimation in the p-Anisotropic Discontinuous Galerkin Spectral Element Method. Journal of Scientific Computing, 78(1), 433-466.

Rueda-Ramírez, A. M., Manzanero, J., Ferrer, E., Rubio, G., & Valero, E. (2019). A p-multigrid strategy with anisotropic p-adaptation based on truncation errors for high-order discontinuous Galerkin methods. Journal of Computational Physics, 378, 209-233.

Rueda-Ramírez, A. M. (2019). Efficient Space and Time Solution Techniques for High-Order Discontinuous Galerkin Discretizations of the 3D Compressible Navier-Stokes Equations (Doctoral dissertation, Universidad Politécnica de Madrid).

Shen, J. (1996). On a new pseudocompressibility method for the incompressible Navier-Stokes equations. Applied Numerical Mathematics, 21(1), 71-90.

Ferrer, E., Rubio, G., Ntoukas, G., Laskowski, W., Mariño, O. A., Colombo, S., Mateo-Gabín, A., Manrique de Lara, F., Huergo, D., Manzanero, J., Rueda-Ramírez, A. M., Kopriva, D. A., & Valero, E. (2022). HORSES3D: a high-order discontinuous Galerkin solver for flow simulations and multi-physics applications. arXiv preprint arXiv:2206.09733.